If we feel that today we have achieved a level of goodness across our supply network planning and operations, how do we target and mobilize toward greatness? The challenge for us in today’s competitive environment is how to evolve from ‘good’ to ‘great.’

We’ve gotten to ‘good’ by deploying today’s dominant technologies, whose architectural design unfortunately perpetuates significant latency and inefficiency across the supply network. So while getting to ‘great’ involves a lot more than technology (as we will discuss in later posts), the underlying technology platform still needs to evolve in order to support ‘great’.

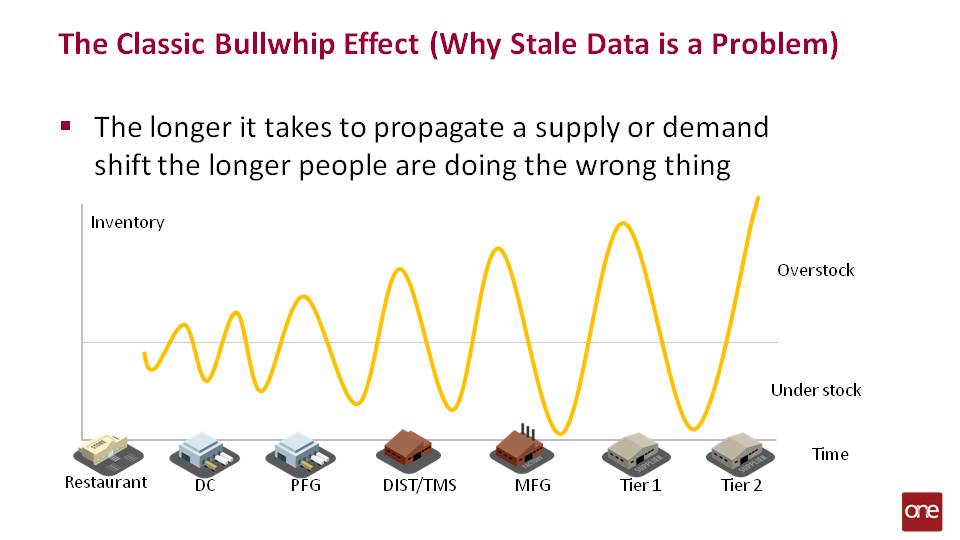

Overcoming Stale Data

Our traditional technology limitations were rooted in the typical ERP environment, where the business user receives information that has passed through multiple software modules and has therefore been re-optimized or reconciled several times. The data appeared accurate for the silo it resided within, but because of these architectural constraints, that data (which is then used to make critical business decisions) tends to be up to 3 weeks old compared to the actual demand signal that triggered its propagation across the supply network in the first place. Although it seems accurate within the silo because it has been crunched, massaged, and normalized, it rarely reflects “today’s” market demands. Our only option was to make decisions based on where our demand or supply needs stood 3 weeks ago rather than today.

When we can tell our business users that they will receive real-time supply network information as of the last ten minutes, we will have deployed a technology platform that enables the supply network to get to ‘great’. Because under this paradigm different data become available for synchronization at different times and frequencies, the architecture is designed to optimize individual segments of the supply network while maintaining overall network integrity.

Maintaining this overall network integrity while optimizing individual supply network segments, and modeling large data sets along with their associated measurements and analytics, has changed the requirements for data representation. We no longer need to limit the data that represents the supply chain because of traditional system or technology constraints. That is why successful cloud platforms employ a Hadoop-based architecture with a horizontally scaled grid computing deployment.

Advanced business measurement relies heavily on data accessibility. Real-time process automation and management capabilities have changed the game on the types and fidelity of available data. In traditional architectures, accessibility was more or less synonymous with integration, universal object definitions, and longevity. Advanced cloud platforms, on the other hand, can provide a real-time business process management layer that gives full control and interaction while a transaction is executing, through state change, milestone, and tracking event linkages. This contextualized, process-level data is fully accessible, subject to policy and permission controls, either directly or through the data warehouse.
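
To make the idea of process-level event data a little more concrete, here is a minimal sketch under stated assumptions. Everything in it is hypothetical and illustrative (`ProcessLayer`, `ProcessEvent`, `EventKind`, the role names, and the purchase-order IDs are not from any specific platform): each transaction accumulates state-change, milestone, and tracking events as they happen, and a simple role-to-event-kind mapping stands in for policy and permission control. A real platform would persist these events and feed them to the data warehouse, which is omitted here.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class EventKind(Enum):
    # The three event linkages mentioned in the text
    STATE_CHANGE = "state_change"
    MILESTONE = "milestone"
    TRACKING = "tracking"


@dataclass(frozen=True)
class ProcessEvent:
    transaction_id: str
    kind: EventKind
    detail: str
    timestamp: datetime


@dataclass
class ProcessLayer:
    """Hypothetical in-memory process event store with role-based read access."""

    # Assumed policy model: role -> set of event kinds that role may read
    policy: dict[str, set[EventKind]]
    events: list[ProcessEvent] = field(default_factory=list)

    def record(self, transaction_id: str, kind: EventKind, detail: str) -> ProcessEvent:
        # Capture the event while the transaction is executing, linked to its ID
        event = ProcessEvent(transaction_id, kind, detail, datetime.now(timezone.utc))
        self.events.append(event)
        return event

    def query(self, role: str, transaction_id: str) -> list[ProcessEvent]:
        # Return only the events this role is permitted to see, in recorded order;
        # unknown roles get an empty permission set and therefore no events
        allowed = self.policy.get(role, set())
        return [
            e for e in self.events
            if e.transaction_id == transaction_id and e.kind in allowed
        ]


if __name__ == "__main__":
    layer = ProcessLayer(policy={
        "planner": {EventKind.STATE_CHANGE, EventKind.MILESTONE, EventKind.TRACKING},
        "supplier": {EventKind.MILESTONE},
    })
    layer.record("PO-1001", EventKind.STATE_CHANGE, "created -> confirmed")
    layer.record("PO-1001", EventKind.MILESTONE, "shipped from supplier")
    layer.record("PO-1001", EventKind.TRACKING, "in transit")

    print(len(layer.query("planner", "PO-1001")))  # 3
    print([e.detail for e in layer.query("supplier", "PO-1001")])  # ['shipped from supplier']
```

The design choice here is simply that events are captured at the moment they occur and filtered at read time, so different users see different slices of the same live process data rather than separately reconciled copies.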

To read more about this subject, I suggest the new whitepaper, “Supply Chain’s New World Order”, where I discuss the cloud, S&OP, and why a holistic approach is needed for supply chain management.